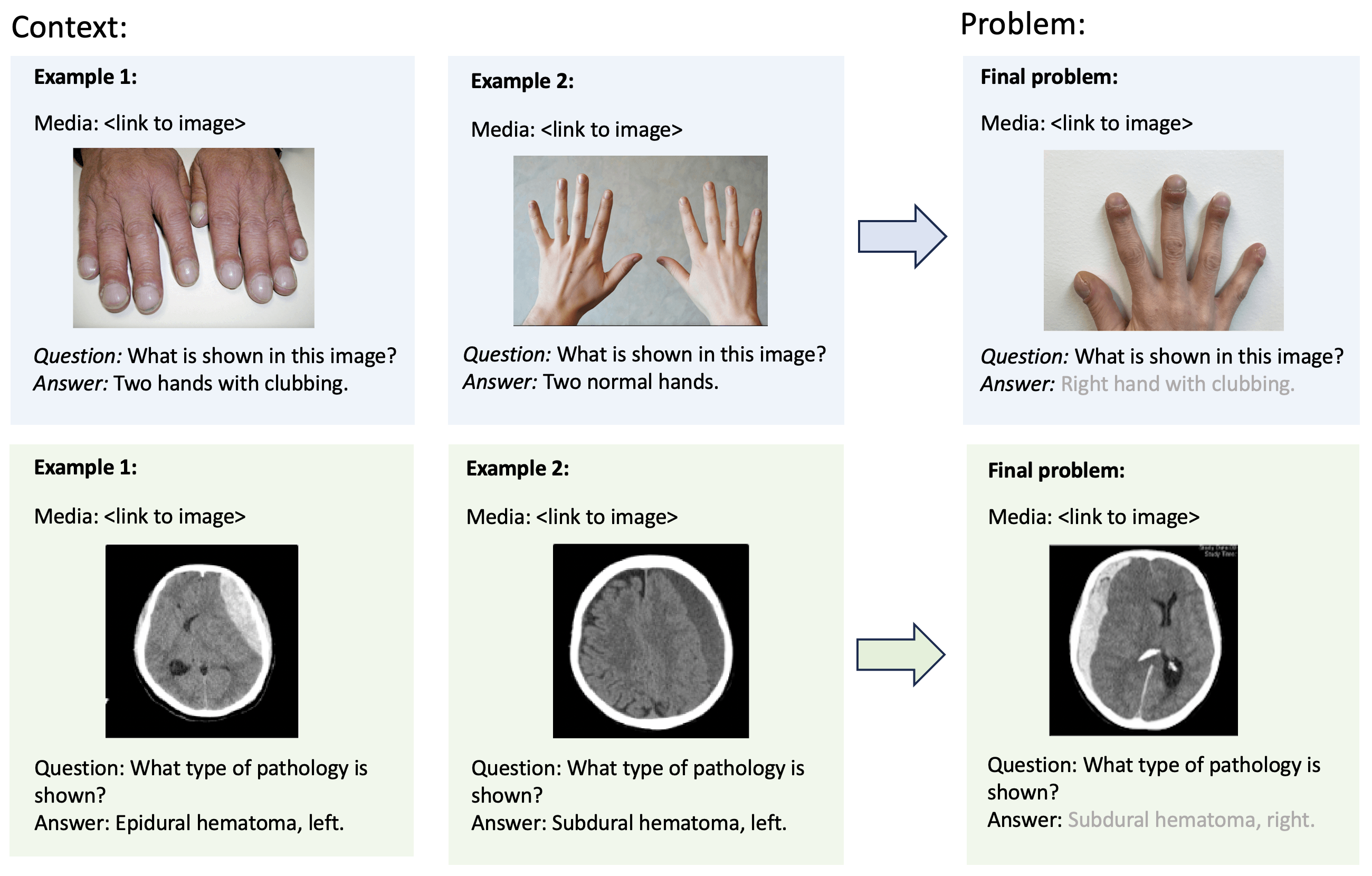

Multimodal in-context learning (ICL) remains underexplored despite significant potential for specialized domains such as medicine. Clinicians routinely encounter diverse, specialized tasks requiring adaptation from limited examples, such as drawing insights from a few relevant prior cases or considering a constrained set of differential diagnoses. While multimodal large language models (MLLMs) have shown advances in medical visual question answering (VQA), their ability to learn multimodal tasks from context is largely unknown. We introduce SMMILE, the first expert-driven multimodal ICL benchmark for medical tasks. Eleven medical experts contributed problems, each including (1) a multimodal query and (2) multimodal in-context examples as task demonstrations. SMMILE encompasses 111 problems (517 question-image-answer triplets) covering 6 medical specialties and 13 imaging modalities. We further introduce SMMILE++, an augmented variant with 1038 permuted problems. Benchmark evaluation of 15 MLLMs reveals that most exhibit moderate to poor multimodal ICL ability in medical tasks. In open-ended evaluations, ICL contributes only 8% average improvement over zero-shot on SMMILE and 9.4% on SMMILE++. Analysis reveals the importance of selecting relevant in-context examples: one noisy or irrelevant example can result in average performance reductions of up to 9.5%. We also identify a recency bias in MLLMs, where placing the most relevant example last in the example list can lead to substantial improvements in performance. SMMILE thus highlights critical limitations and biases in current MLLMs when learning multimodal medical tasks from context.
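To make the problem structure described above concrete, here is a minimal sketch of how one problem (in-context examples plus a query) and its reordered variants could be represented. It is an illustration only, not the SMMILE data format or the authors' procedure for building SMMILE++. The names `Triplet`, `Problem`, `permuted_variants`, and `build_prompt` are hypothetical, as is the `<image: ...>` text placeholder that stands in for however a given MLLM actually receives images.

```python
from dataclasses import dataclass
from itertools import permutations
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Triplet:
    """One question-image-answer triplet (hypothetical field names)."""
    question: str
    image_path: str
    answer: str


@dataclass(frozen=True)
class Problem:
    """A multimodal ICL problem: ordered in-context examples plus one query."""
    examples: Tuple[Triplet, ...]
    query: Triplet


def permuted_variants(problem: Problem, limit: Optional[int] = None) -> List[Problem]:
    """Return copies of `problem` with the in-context examples reordered.

    Assumption: every ordering of the examples is a valid variant. The query
    is never moved. `limit` caps the number of variants returned.
    """
    variants: List[Problem] = []
    for order in permutations(problem.examples):
        variants.append(Problem(examples=order, query=problem.query))
        if limit is not None and len(variants) >= limit:
            break
    return variants


def build_prompt(problem: Problem) -> str:
    """Render a text-only stand-in for the interleaved image/text prompt."""
    parts = [
        f"<image: {ex.image_path}>\nQuestion: {ex.question}\nAnswer: {ex.answer}"
        for ex in problem.examples
    ]
    q = problem.query
    # The query's answer is withheld; the model is expected to complete it.
    parts.append(f"<image: {q.image_path}>\nQuestion: {q.question}\nAnswer:")
    return "\n\n".join(parts)
```

Because the example order is explicit in `Problem.examples`, the same structure can be used to probe order effects such as the recency bias mentioned above, for instance by comparing variants in which a given example appears first versus last.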

@article{rieff2025smmile,
  title={SMMILE: An Expert-Driven Benchmark for Multimodal Medical In-Context Learning},
  author={Melanie Rieff and Maya Varma and Ossian Rabow and Subathra Adithan and Julie Kim and Ken Chang and Hannah Lee and Nidhi Rohatgi and Christian Bluethgen and Mohamed S. Muneer and Jean-Benoit Delbrouck and Michael Moor},
  year={2025},
  eprint={2506.21355},
  archivePrefix={arXiv},
  primaryClass={cs.LG},
  url={https://arxiv.org/abs/2506.21355},
}